Computational evolution, variational MARL and XAI

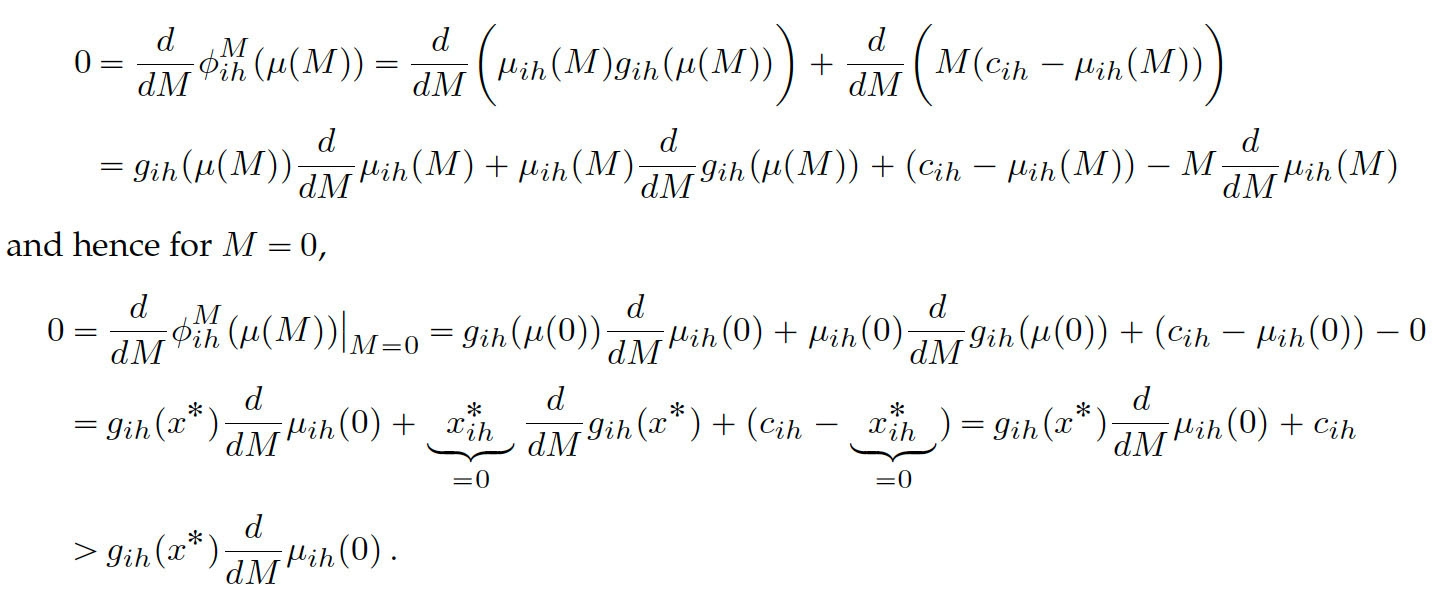

The multi-population replicator dynamics (RD) is a dynamic approach to coevolving populations and multi-player games. In general, not every equilibrium of the RD is a Nash equilibrium (NE) of the underlying game, and convergence is not guaranteed. In particular, no interior equilibrium can be asymptotically stable in the multi-population RD, which results, for example, in cyclic orbits around a single interior NE. We introduce a new notion of equilibria of the RD, called mutation limits, which is invariant under the specific choice of mutation parameters. We prove the existence of mutation limits for a large class of games, and consider a particularly interesting subclass, called attracting mutation limits, which offer approximate solutions even if the original dynamics is not convergent. Thus, mutation stabilises the system in certain cases and makes attracting mutation limits near-attainable. We observe that mutation limits bear some similarity to Q-learning in multi-agent reinforcement learning, and we are interested in investigating this relationship in the context of variational principles.
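The stabilising effect of mutation can be illustrated with a minimal numerical sketch. It is not the construction used in the featured publication: the game (Matching Pennies), the additive mutation term `mu * (c - x)` with a uniform mutation distribution `c`, the fixed-step Runge-Kutta integrator, and all function names are assumptions chosen for illustration. Without mutation the orbit cycles around the interior NE at (1/2, 1/2); with a small mutation rate it spirals into it.

```python
import numpy as np

# Matching Pennies (an illustrative choice, not taken from the source).
A = np.array([[1.0, -1.0], [-1.0, 1.0]])  # payoffs of population 1
B = -A                                    # payoffs of population 2 (zero-sum)

def rd_with_mutation(x, y, mu, c):
    """Two-population replicator dynamics plus an assumed mutation term mu * (c - x)."""
    fx = A @ y        # fitness of each strategy in population 1
    fy = B.T @ x      # fitness of each strategy in population 2
    dx = x * (fx - x @ fx) + mu * (c - x)
    dy = y * (fy - y @ fy) + mu * (c - y)
    return dx, dy

def simulate(x0, y0, mu, steps=20000, dt=0.01):
    """Integrate with classical fourth-order Runge-Kutta and return the final state."""
    c = np.full(2, 0.5)  # uniform mutation distribution (assumption)
    z = np.concatenate([x0, y0]).astype(float)

    def f(z):
        dx, dy = rd_with_mutation(z[:2], z[2:], mu, c)
        return np.concatenate([dx, dy])

    for _ in range(steps):
        k1 = f(z)
        k2 = f(z + 0.5 * dt * k1)
        k3 = f(z + 0.5 * dt * k2)
        k4 = f(z + dt * k3)
        z = z + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return z[:2], z[2:]

def distance_to_interior_ne(x, y):
    """Euclidean distance of (x[0], y[0]) from the interior NE (1/2, 1/2)."""
    return float(np.hypot(x[0] - 0.5, y[0] - 0.5))

x0, y0 = [0.9, 0.1], [0.6, 0.4]
d_plain = distance_to_interior_ne(*simulate(x0, y0, mu=0.0))
d_mut = distance_to_interior_ne(*simulate(x0, y0, mu=0.05))
print(f"no mutation:   distance to NE = {d_plain:.3f}")
print(f"with mutation: distance to NE = {d_mut:.6f}")
```

In this sketch the uniform mutation distribution leaves the interior NE in place, so the example shows only the stabilisation effect. With a non-uniform `c` the rest point would generally shift with `mu`, which is where taking the limit of vanishing mutation becomes relevant.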

Featured publication:

Bauer, J., Broom, M. and Alonso, E. (2019). The stabilization of equilibria in evolutionary game dynamics through mutation: mutation limits in evolutionary games. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences, 475(2231). doi:10.1098/rspa.2019.0355.