Quality control and motion correction for cardiac MR images

The effectiveness of a cardiac MR scan depends on the operator's ability to tune the acquisition parameters to the subject being scanned, and on whether imaging artefacts occur, such as those caused by cardiac and respiratory motion.

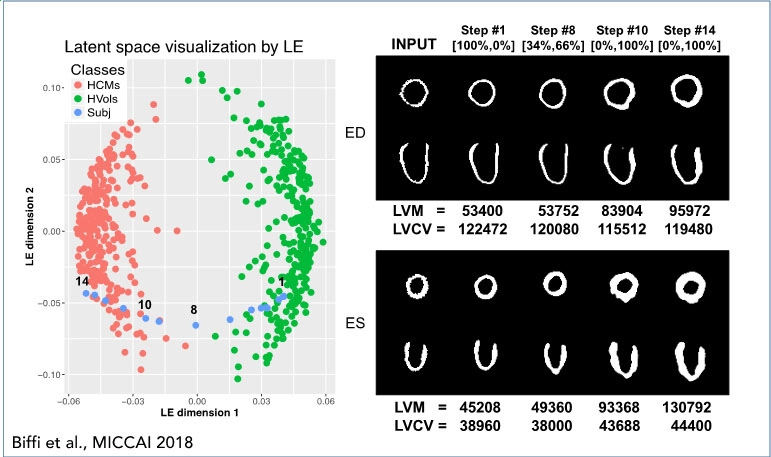

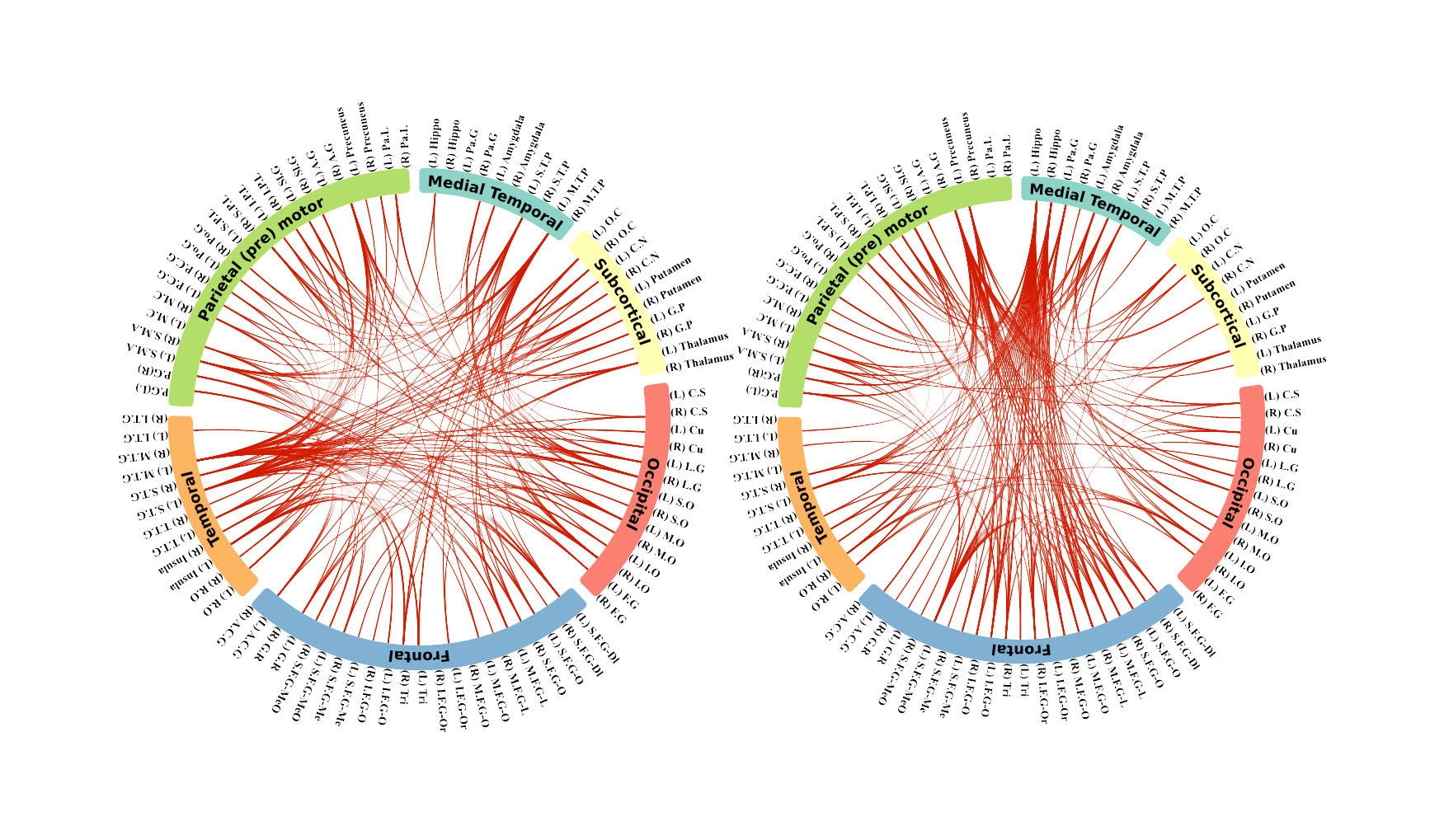

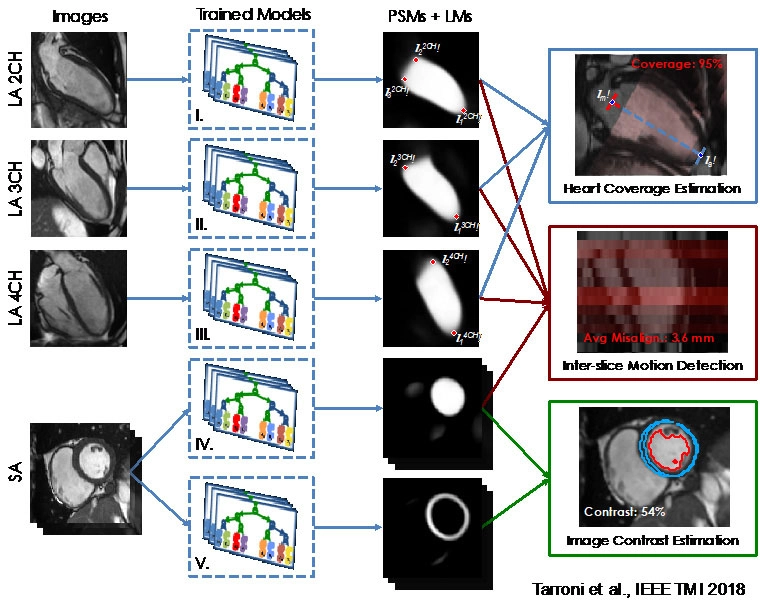

Together with colleagues at Imperial College, we developed a learning-based, fully automated quality control pipeline for cardiac MR short-axis image stacks. Our pipeline performs three important quality checks: 1) heart coverage estimation (to detect whether the whole left ventricle, LV, has been imaged), 2) inter-slice motion detection (to estimate and correct slice misalignments in the stack caused by respiratory motion), 3) image contrast estimation in the cardiac region (to estimate intensity differences between the LV cavity and the myocardium). The pipeline was successfully validated on 3,000 cardiac MR scans from the UK Biobank dataset. It is currently being deployed on a larger UK Biobank cohort (~30,000 scans) to detect potential factors associated with lower-quality scans.
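To make two of these checks more concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the pipeline's actual implementation: it assumes a per-pixel label map and one LV centre estimate per slice are already available, and the label values and the function names `cavity_myocardium_contrast` and `slice_offsets` are hypothetical. Contrast is approximated as the difference in mean intensity between LV cavity and myocardium, and inter-slice misalignment as each slice's in-plane offset from the stack's median LV centre.

```python
from statistics import mean, median

# Hypothetical label values for a per-pixel segmentation
# (an assumption for this sketch, not taken from the pipeline).
LV_CAVITY = 1
MYOCARDIUM = 2


def cavity_myocardium_contrast(image, labels):
    """Return mean LV-cavity intensity minus mean myocardium intensity.

    `image` and `labels` are equally sized 2D lists (rows of pixels).
    Returns None if either region is absent from the label map.
    """
    cavity, myocardium = [], []
    for image_row, label_row in zip(image, labels):
        for value, label in zip(image_row, label_row):
            if label == LV_CAVITY:
                cavity.append(value)
            elif label == MYOCARDIUM:
                myocardium.append(value)
    if not cavity or not myocardium:
        return None
    return mean(cavity) - mean(myocardium)


def slice_offsets(centroids):
    """Return each slice's in-plane offset from the median LV centre.

    `centroids` holds one (x, y) LV centre estimate per slice.
    Large offsets hint at inter-slice misalignment.
    """
    ref_x = median(x for x, _ in centroids)
    ref_y = median(y for _, y in centroids)
    return [(x - ref_x, y - ref_y) for x, y in centroids]


if __name__ == "__main__":
    image = [[100, 100, 40], [100, 100, 40]]
    labels = [[1, 1, 2], [1, 1, 2]]
    print(cavity_myocardium_contrast(image, labels))
    print(slice_offsets([(10, 10), (10, 11), (14, 10)]))
```

Using the median as the reference keeps a single badly displaced slice from shifting the reference position, a simple choice made for this sketch only.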

Featured publications:

Tarroni, G., Oktay, O., Bai, W., Schuh, A., Suzuki, H., Passerat-Palmbach, J. … Rueckert, D. (2019). Learning-based quality control for cardiac MR images. IEEE Transactions on Medical Imaging, 38(5), pp. 1127–1138. doi:10.1109/TMI.2018.2878509.

Tarroni, G., Oktay, O., Sinclair, M., Bai, W., Schuh, A., Suzuki, H. … Rueckert, D. (2018). A comprehensive approach for learning-based fully-automated inter-slice motion correction for short-axis cine cardiac MR image stacks. https://arxiv.org/abs/1810.02201.